“We are what we repeatedly do. Excellence, then, is not an act, but a habit.”

One of the important goals for a software organization is to create efficient, repeatable, and predictable processes that serve as a sustainable long-term methodology for the business. This set of processes is referred to as the organization's Software Development Life Cycle (SDLC). The SDLC plays a very important role in shaping the success of a software company: it defines the culture and pace of the product team.

Early SDLC

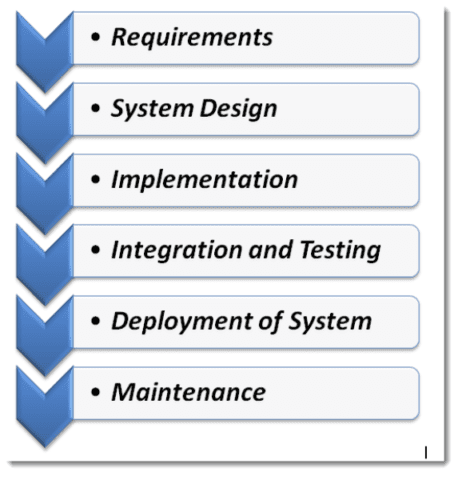

SDLC methodologies have been in practice for a while, one of the earliest being the waterfall model. In this model, the process flows from one stage to the next, and each stage strictly depends on the previous one being completed. Early integration testing relied mostly on manual testing and was often carried out at the end of the cycle, just days before or even on the day of the release. For example, as the waterfall figure shows, the integration testing phase cannot start until all teams have completed the entire coding phase. This is because the cost of manual integration testing multiplies if testing is done as and when individual teams complete their coding: the QA team would have to run the same tests over and over again.

Image: Waterfall SDLC method

The shortcomings of the waterfall method were improved to an extent with the advent of automation frameworks like Selenium for frontend code and developer-written unit tests for backend code. However, the integration tests were still not run until the code was available in a common release branch or repository. These tests also ran on infrastructure that was separate from development and maintained by a QA team, who had to automate their previously manual testing. Typically, the integration tests ran nightly against the batch of changes made that day. This process also relied on someone taking the initiative to find the root cause of any failure and follow up with the relevant team or person.

Both of the above processes were costly, and releases very often became mega events. This also meant organizations could not release as and when they wished. They needed multiple manual checks in the process, involving complex release management and dedicated people, to ensure quality for regular and patch releases.

Here is a funny image that describes the mood on release day (or night) when things do not go as planned:

Modern SDLC

The modern SDLC processes have evolved from their predecessors. One such modern SDLC is the agile development process, which relies on providing quick, automated feedback to developers early in the cycle and continuously thereafter. This continuous integration (CI) feedback loop reduces the risk of regression bugs creeping into the product in the least expensive way. We will discuss in more detail some of the tools that can help achieve an efficient CI process. The tools mentioned here are not a complete list, but they are relevant for most software organizations. The combinations are also not fixed and can differ.

Gerrit

About: Gerrit is a tool developed by Google to facilitate review of code before it gets merged into the team or master repository. Gerrit can also be configured to trigger a Jenkins job that runs unit tests and marks the code change as verified before a reviewer reviews it. More about Gerrit: https://www.gerritcodereview.com/

Gerrit plays a crucial role in an engineering team's day-to-day development. Developers make their code changes and write unit tests for any new changes. Once done, they rebase onto the latest stable (master) branch from Gerrit and then submit their changes for review via a git push command that is integrated with Gerrit. All such submissions are referred to as Change Requests (CRs). Gerrit then picks up the CR and triggers the suite of tests (both regression unit tests and integration tests, as configured).
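The rebase-and-push step can be sketched as a small Python helper that only builds the git commands. This is a minimal sketch, assuming the remote is named `origin` and the review branch is `master`; the function name is hypothetical. Pushing to `refs/for/<branch>` is the Gerrit convention for sending a commit to review instead of directly to the branch.

```python
from typing import List


def gerrit_review_commands(branch: str = "master", remote: str = "origin") -> List[List[str]]:
    """Return the git commands for rebasing local work and pushing it for review.

    The remote and branch names are assumptions; adjust them to your setup.
    """
    return [
        # Bring in the latest state of the remote.
        ["git", "fetch", remote],
        # Replay local commits on top of the latest stable branch.
        ["git", "rebase", f"{remote}/{branch}"],
        # Push to refs/for/<branch> so Gerrit opens (or updates) a review.
        ["git", "push", remote, f"HEAD:refs/for/{branch}"],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so the sketch has no side effects.
    for command in gerrit_review_commands():
        print(" ".join(command))
```

The commands are returned as argument lists so they could be passed to `subprocess.run` one at a time if you wanted to execute them.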

The tests can be hosted on a CI/CD tool like Jenkins. On successful completion, the CR is marked as verified. Conversely, any failure in the Jenkins test job marks the CR as not verified (a negative vote). This is a very important and effective way to catch regressions well before anyone other than the developer is involved, which cuts down maintenance cost significantly. For verified CRs, the reviewers can now review the changes and provide their feedback. They can also check unit test coverage and suggest improvements.

Developers can rebase and resubmit the same CR for multiple iterations until it is both verified by CI (Jenkins) and approved by the reviewer(s). Most of the time, two review points are required to complete the review. This is to cover cases where two or more reviewers need to accept the CR.
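The gating rule described above can be modeled in a few lines of Python. This is a simplified, hypothetical model and not Gerrit's API: the class, field, and function names are invented for illustration, and the rule that review points are summed to a threshold of two is an assumption drawn from the text. Real Gerrit labels and submit rules are configured per project and may differ.

```python
from dataclasses import dataclass, field
from typing import Dict

# Assumption taken from the text: two review points complete a review.
REQUIRED_REVIEW_POINTS = 2


@dataclass
class ChangeRequest:
    # +1 means CI verified the CR, -1 means the CI job failed, 0 means not run yet.
    ci_vote: int = 0
    # Maps reviewer name to the points that reviewer gave.
    review_votes: Dict[str, int] = field(default_factory=dict)


def is_mergeable(cr: ChangeRequest) -> bool:
    """Return True when CI has verified the CR and reviewers have approved it."""
    if cr.ci_vote != 1:
        return False  # not verified by CI
    if any(points < 0 for points in cr.review_votes.values()):
        return False  # any negative review blocks the merge in this model
    return sum(cr.review_votes.values()) >= REQUIRED_REVIEW_POINTS
```

For example, a CR with a CI vote of +1 and one point each from two reviewers is mergeable in this model, while the same CR with a failed CI job is not, no matter how many reviewers approve.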

One other advantage of (and recommendation for) these CRs is to submit them regularly, in small chunks that each focus on one area of the code. This keeps each CR concise and easy for the reviewer to understand. It also greatly reduces the risk of a big breaking change later in the cycle. With smaller changes, it is easy to pinpoint where things stopped working if a regression creeps in. Small, frequent changes are also useful when multiple people are working on a feature, because the cycle is faster. For example, backend APIs become available for the UI team to consume as and when they are ready.
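To illustrate why a history of small changes makes a regression easy to pinpoint, here is a minimal sketch of a binary search over an ordered list of changes, similar in spirit to what `git bisect` does. It assumes the changes are ordered oldest to newest and that once a change breaks the tests, every later change also fails; the function and parameter names are hypothetical.

```python
from typing import Callable, Optional, Sequence


def first_failing_change(
    changes: Sequence[str], passes: Callable[[str], bool]
) -> Optional[str]:
    """Return the earliest change at which the tests fail, or None if all pass.

    Assumes `changes` is ordered oldest to newest and that failures persist
    once introduced, so the pass/fail results are sorted.
    """
    low, high = 0, len(changes)
    while low < high:
        mid = (low + high) // 2
        if passes(changes[mid]):
            low = mid + 1  # regression was introduced after this change
        else:
            high = mid  # this change or an earlier one introduced it
    return changes[low] if low < len(changes) else None
```

Each step halves the number of candidate changes, so the test suite runs only a handful of times even for a long history. The smaller each change is, the less code there is to inspect once the search lands on it.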

Jenkins (CI/CD)

Jenkins has been around for a while, and most of us have come across it at least once in our careers. Jenkins can be used to automate recurring jobs. Among the many types of Jenkins jobs, two are the main ones: CI jobs (which run unit and integration tests) and CD jobs (which package and deploy the product). CI jobs can run at multiple checkpoints. One job runs for every CR from Gerrit to ensure the regression tests pass. Less frequent, long-running jobs that cover a broader, more complex set of test scenarios can run nightly, or at any other frequency, depending on complexity and needs.
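The split between per-CR jobs and nightly jobs can be sketched as a simple mapping from trigger to test suites. This is an illustration only, not Jenkins configuration: the trigger and suite names are hypothetical, and the text only distinguishes fast per-CR regression tests from broader, long-running ones.

```python
from typing import Dict, List

# Hypothetical trigger and suite names, chosen for illustration.
SUITES_BY_TRIGGER: Dict[str, List[str]] = {
    # Runs for every CR, so it should stay fast.
    "change_request": ["unit", "regression_integration"],
    # Runs less often, so it can afford the broader, long-running suite.
    "nightly": ["unit", "regression_integration", "extended_integration"],
}


def suites_for(trigger: str) -> List[str]:
    """Return the test suites a CI job should run for the given trigger."""
    try:
        # Return a copy so callers cannot mutate the shared configuration.
        return list(SUITES_BY_TRIGGER[trigger])
    except KeyError:
        raise ValueError(f"unknown trigger: {trigger!r}") from None
```

Keeping the per-CR set small is what keeps the feedback loop quick; anything slow moves to the less frequent trigger.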

Docker and containerization

One of the major challenges in software development is matching the production deployment on a dev machine. This is critical for making sure that issues found in production are reproducible locally under similar circumstances. It is also good practice to develop and run software in an environment similar to production. This makes the process uniform from development to production and avoids last-minute deployment surprises.

Until recently this was a huge challenge. Special environments, such as shared development or integration environments, were created for this purpose. But maintaining these environments became overwhelming as multiple distributed technologies that work together were introduced into the stack. This is where containerization and virtualization came in to solve the problem.

Docker is one such technology. It makes it possible to run small, focused containers on any OS platform to recreate the experience of a full-blown production environment. Though these containers are not as efficient as real machines, they enable developers to run the setup locally and ensure that what they develop is tested and validated locally before it moves into production. This also makes it possible to write unified deployment scripts instead of maintaining separate ones per environment.
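A unified deployment script can be sketched as one function that builds the same `docker run` command for every environment and varies only the configuration. This is a minimal sketch under stated assumptions: the image name, the `config/<environment>.env` file layout, the container naming, and the port are all hypothetical.

```python
from typing import List


def docker_run_command(image: str, environment: str, port: int = 8080) -> List[str]:
    """Build one `docker run` command that works for any environment.

    Only the env file and the container name change between environments;
    the image and the flags stay identical.
    """
    env_file = f"config/{environment}.env"  # hypothetical per-environment settings
    return [
        "docker", "run",
        "--rm",                          # remove the container when it exits
        "--detach",                      # run in the background
        "--name", f"app-{environment}",
        "--env-file", env_file,          # environment-specific configuration
        "--publish", f"{port}:{port}",   # expose the service port on the host
        image,
    ]


if __name__ == "__main__":
    # The image name is a placeholder, not a real published image.
    for env in ("local", "production"):
        print(" ".join(docker_run_command("example/app:1.0", env)))
```

Because the image is the same everywhere, what a developer validates locally is the same artifact that later runs in production.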

We went through some SDLC tools that help product teams accelerate and improve their development process. Several other tools and technologies are used to build, operate, and deploy software, but the ones discussed here are the most important ones and are part of day-to-day developer activities.

SDLC @ Okera

The SDLC at Okera employs the above-mentioned tools as part of the development process. It has proved to be very effective and ensures that the quality of the product is always maintained. The product stays stable, so we can cut a release or patch as and when we wish. As developers at Okera, we focus on writing quality code and unit tests and on making sure the code passes the initial step of Gerrit CR verification. Our process has several benefits:

- Most of the heavy lifting for regression verification is already done in the Gerrit CR verification phase. This confirms upfront that our changes are good, rather than waiting for days while a QA team goes through manual or automated testing to point out issues.

- With small, incremental changes, it becomes easy to break big problems into smaller chunks and address them. This makes it easier to measure and adjust (agile) with mid-point checks in the scrum development cycle.

- These processes largely avoid multiple release meetings and provide a factual view of the current state of the release, because the master branch is in good shape most of the time in terms of quality and release readiness. Tickets marked as done by developers really are done, because they have been validated by the automation.

- Developers are not involved in release management meetings, and the CD process is automated via Jenkins. This gives developers plenty of time back to solve real-world problems, with enough time to innovate and learn.

- We don’t have time-consuming, grand-scale release nights involving multiple personnel. The release is as smooth as any other TGIF 🙂

As with any process, we still have a lot to learn and improve upon, and we are continuously improving our processes. The product and features we deliver in every release are great testimony to our SDLC methodology.